Amdahl’s law and agentic coding

AI coding is providing good examples of Amdahl’s law at work.

What is Amdahl’s law?

Named after computer engineer and entrepreneur Gene Amdahl, who presented it in 1967, the law states that the speedup you get from making part of a system faster is limited by the fraction of the total runtime that part accounts for. One way to look at it is as an expression of diminishing returns.
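In its standard textbook form, let $p$ be the fraction of the original runtime spent in the part being sped up, and let $s$ be the factor by which that part gets faster. The overall speedup is:

$$S = \frac{1}{(1 - p) + \frac{p}{s}}, \qquad \lim_{s \to \infty} S = \frac{1}{1 - p}$$

However fast the optimized part becomes, the untouched fraction $1 - p$ sets a hard ceiling on the overall gain.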

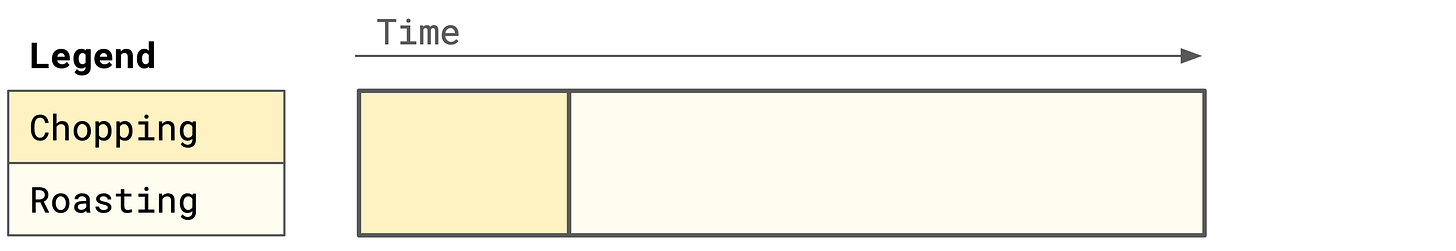

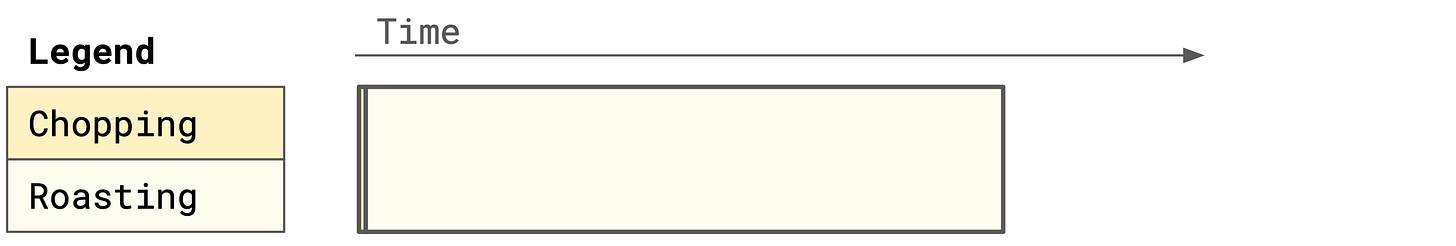

Consider an example from the kitchen. Imagine you’re making a recipe that has you chop vegetables for 15 minutes and roast them for 45.

You decide to buy pre-cut vegetables, which brings the prep time down to the one minute it takes to plop everything onto a pan. Huge speedup, right?

Amdahl’s law points out that, while prep now takes 1/15th the time, the overall time is still dominated by the 45 minutes of roasting. Total time-to-cooked-meal drops from 60 minutes to 46, a reduction of only about 23%.
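The kitchen arithmetic can be checked with a minimal Python sketch of the standard Amdahl formula, overall speedup = 1 / ((1 - p) + p / s), where p is the fraction of the original time being sped up and s is how much faster that part gets. The function name `amdahl_speedup` is an illustrative choice, not a standard API.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the original time is made s times faster."""
    return 1.0 / ((1.0 - p) + p / s)


# Kitchen example: 15 minutes of chopping, 45 minutes of roasting.
prep, roast = 15.0, 45.0
total_before = prep + roast                # 60 minutes
total_after = prep / 15.0 + roast          # pre-cut vegetables: 1 minute of prep, 46 total

p = prep / total_before                    # chopping is 25% of the original time
speedup = amdahl_speedup(p, 15.0)          # same as 60 / 46, roughly 1.30x
saved = 1.0 - total_after / total_before   # roughly 0.233, the "23%" above

# Even instantaneous prep (s -> infinity) caps the speedup at 1 / (1 - p),
# because the 45 minutes of roasting never get shorter.
ceiling = 1.0 / (1.0 - p)                  # 4/3, roughly 1.33x

print(f"after: {total_after:.0f} min, speedup {speedup:.2f}x, "
      f"time saved {saved:.0%}, ceiling {ceiling:.2f}x")
# prints "after: 46 min, speedup 1.30x, time saved 23%, ceiling 1.33x"
```

The ceiling is the key number: even with chopping reduced to zero, the meal can get at most a third faster.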

When you optimize a single part of a sequential process, the time the process takes ends up being dominated by its other parts. You whacked a mole, but more moles remain.

What does this have to do with AI?

AI coding tools allow us to produce code faster, but for most projects of meaningful size, it turns out that the writing of the code wasn’t the bottleneck.

Once the code-writing part is sped up, you’re left with slowdowns in the other parts of the software development lifecycle.

Figuring out what should be built in the first place requires talking to customers, characterizing bugs, or identifying new ideas to prototype.

Beyond automated testing, you probably still want to click through UI flows locally before putting up a PR.

You might still want at least some degree of the human element in the code review process.

Deploying software at scale takes time, at least if you want to do it safely.

While LLMs write code quickly, they also write bugs quickly. This can mean more roundtrips through the development cycle before you’ve actually built what you want.

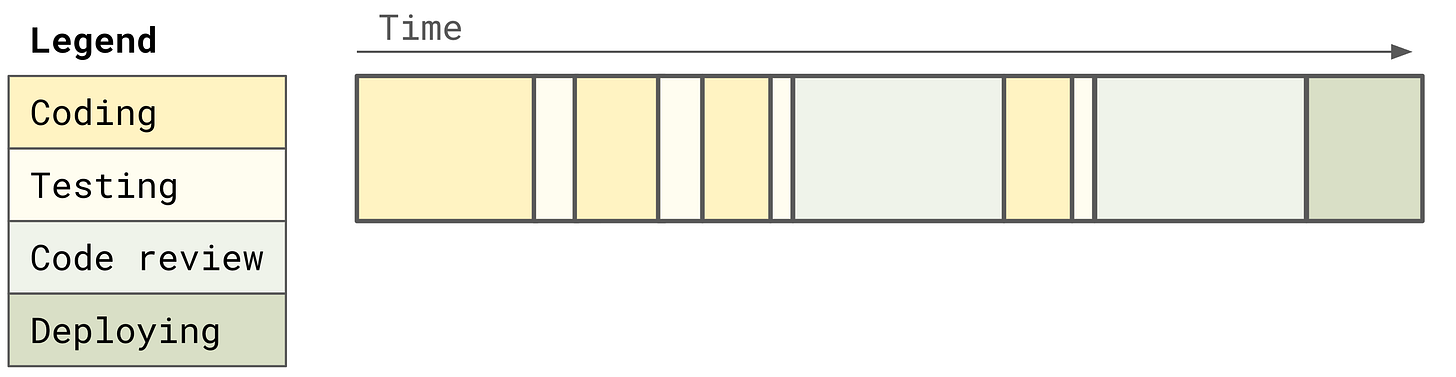

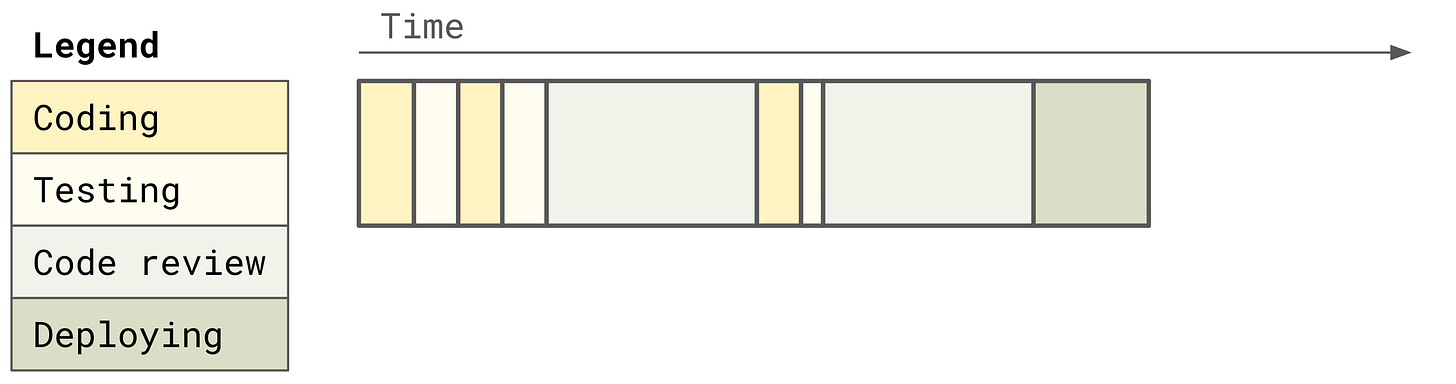

Consider the following pre-AI lifecycle of a new feature being developed.

If we have Claude or Cursor write the code, we can potentially save some time and roundtrips in the code-writing parts of the lifecycle. But now our code review and deploy processes are the glaring bottlenecks.
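A toy model shows how the bottleneck shifts. The sketch below assumes a strictly sequential lifecycle and uses stage durations invented purely for illustration. They are not taken from the diagrams or from any real team. It speeds up only the code-writing stage and reports which stage dominates afterwards.

```python
# Hypothetical stage durations for one feature, in hours. These numbers are
# illustrative assumptions, not measurements from any real team.
stages = {
    "decide what to build": 4.0,
    "write code": 16.0,
    "test locally": 3.0,
    "code review": 6.0,
    "CI and deploy": 5.0,
}


def total_hours(durations: dict[str, float]) -> float:
    """End-to-end time when the stages run one after another."""
    return sum(durations.values())


def bottleneck(durations: dict[str, float]) -> str:
    """The single slowest stage, i.e. the one that now dominates."""
    return max(durations, key=durations.get)


# Assume an agent writes the code 8x faster and every other stage is unchanged.
with_agent = {**stages, "write code": stages["write code"] / 8}

for label, plan in (("before", stages), ("with agent", with_agent)):
    print(f"{label}: {total_hours(plan):.0f} h total, bottleneck = {bottleneck(plan)}")
# prints:
# before: 34 h total, bottleneck = write code
# with agent: 20 h total, bottleneck = code review
```

With these made-up numbers, an 8x faster coding stage shortens the whole cycle by only 1.7x (34 h to 20 h). Code review becomes the next mole to whack, with CI and deploy close behind.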

For established companies (in contrast to newer companies using AI from day one), adopting agentic coding ends up exposing underinvestment in developer tooling. A slow build tool becomes a far more painful hurdle to getting PRs out. A CI system that takes 30+ minutes to run becomes an unacceptable bottleneck. Industry-standard code review processes can’t keep up with the volume of code being changed.

What’s to be done about it?

In the grand scheme of things, these problems are probably temporary growing pains amid the shift to agentic coding. We’ll figure out ways to evolve each step in the software development lifecycle, resulting in a more streamlined overall flow from idea to deployed software. Undoubtedly, part of the solution is to use agentic coding to perform this evolution.

In the meantime, for companies founded before ~2025 that find themselves in an existential crisis over how to successfully adopt AI coding, the solution lies in making the right investments in tooling and infrastructure. Listen to your engineers when they try to tell you what’s slowing them down. Don’t just say to “use AI more”—work with them to identify where the specific bottlenecks now lie. And then fund projects to streamline them.

I'd love it if LLMs could cut the cost of coding to zero, even if coding were only 10% of the total cost. That hasn't been my experience yet, though, at least not without compromising on the other costs involved.
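Put through Amdahl's law, that 10% figure sets a hard ceiling. With $p = 0.1$ and coding made infinitely cheap ($s \to \infty$):

$$S_{\max} = \lim_{s \to \infty} \frac{1}{(1 - p) + \frac{p}{s}} = \frac{1}{1 - 0.1} = \frac{1}{0.9} \approx 1.11$$

So even free code would trim the total cost by at most 10%, and that holds only if none of the other costs grow as a result.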

I find that the cost of landing features and projects is seldom in the coding, though. It's in understanding the problem the feature solves, aligning with other stakeholders on the value it adds, simplifying the problem, finding the right home for the new complexity, and only then writing the code. Skipping straight to the end by turning an idea directly into an app can feel like a cheat code, but working backwards afterwards to understand the why can be far more painful and arduous.

I've played with agentic coding enough (and watched enough PMs and other folks do the same) to know the familiar story. Someone wants a recipe builder that can describe how to make pancakes from whatever they have on hand. The LLM creates a fairly impressive UI that returns a recipe and tells you where to get the remaining ingredients. It also offers a bevy of well-written options on whether banana or chocolate should be the pick of the day. The user gets a working project, and it looks like it was all done without any "coding."

Of course, that example is not what professional software development looks like. Yet enthusiastic people, excited by the instant creation of their recipe software, see the time engineers spend slowly "producing code" as inefficient, something this model would optimize away. Why think ahead so much about all these decisions (or think at all) if we can just query the genius of a machine that will know more than any of us ever will?

Over the last year I've seen attempts to deconstruct our engineering processes to better fit the pillars of agentic coding ("vibe coding" was the sophomoric term for it in the past; the big companies pushing this style have given it a suit, a haircut, and a title to match). Each time those processes get tested and fail, there's a bit of a hubbub and a row about what the issue is: there's evidence that the recipe builder worked, so why can't that scale to our app/webpage/API?

At some point, I'm sure, we'll reach a stable state where the exploration opportunities well suited to fast, error-prone development with LLM tools are well understood, and the tools aren't overwrought by someone trying to build a browser in a week. Until then, it's the classic tale of non-eng folks thinking that engineering is primarily "coding," when the reality is that I probably spend less than ten hours a week with an IDE open (and more of that time reading than writing). Though I guess that's better than the reverse: us eng folks not understanding what those biz people actually do.